Data engineering is a field in constant motion. While Python remains the dominant language for many data scientists and engineers, the constantly increasing complexity and volume of data demand a comprehensive set of data engineering tools that goes beyond standard libraries.

This guide explores essential data engineering tools, covering data collection, transformation, storage, orchestration, and performance enhancement. This article will empower data engineers to tackle diverse challenges and optimize their workflows.

Why Python is the Go-To Language for Data Engineering

Python has emerged as the go-to language for data engineering, thanks to its unique combination of simplicity, versatility, and a comprehensive library ecosystem. Python’s clear syntax and readability make it accessible to both beginners and experienced engineers, allowing for faster development and easier collaboration across teams.

The strength of Python lies in its extensive collection of libraries, which cater to virtually every aspect of data engineering. Whether you need to clean and manipulate data, scale your operations, or interact with SQL databases, Python provides powerful, well-maintained tools that can handle the task. For engineers working with large-scale data, Python’s seamless integration with big data platforms further enhances its appeal, enabling efficient processing of massive datasets.

Python’s active and vibrant community is another key factor in its dominance. The continuous development of new libraries and frameworks ensures that Python remains at the cutting edge of data engineering, adapting to new challenges and technologies as they arise. This ongoing innovation makes Python an adaptable and future-proof choice for data engineers, whether they are dealing with structured or unstructured data, batch or real-time processing.

Data Ingestion Tools

Data ingestion is the starting point of any data engineering process. It’s where raw data from various sources is gathered and brought into your pipeline for further processing. Python offers a variety of tools tailored to different data ingestion needs, from handling APIs to web scraping.

Handling APIs with requests

When it comes to interacting with APIs, the requests library is a must-have. It’s a simple yet powerful tool for making HTTP requests, allowing you to fetch data from REST APIs or interact with web services. With its intuitive interface, requests makes it easy to send GET, POST, and other types of requests, making data retrieval from external sources seamless and straightforward.

Web Scraping with BeautifulSoup4 and Scrapy

For web scraping, Python shines with libraries like BeautifulSoup4 and Scrapy. BeautifulSoup4 is invaluable when you need to pull data out of HTML and XML files. It’s particularly useful for parsing and navigating through complex web content, making it an essential tool for smaller web scraping projects. On the other hand, for large-scale web scraping tasks, Scrapy offers a fast, high-level framework that handles everything from data extraction to storing the scraped data. Its robust architecture is designed to manage and scale extensive web scraping projects efficiently.

Library/Framework | Description | Use Cases |

|---|---|---|

requests | An elegant and simple HTTP library for making API calls | Fetching data from REST APIs, web scraping |

Beautiful Soup 4 | A library for pulling data out of HTML and XML files | Web scraping, parsing HTML/XML content |

Scrapy | A fast, high-level web crawling and scraping framework for extracting data from websites

| Large-scale web scraping projects |

By combining these tools, Python provides a versatile toolkit for ingesting data from a wide range of sources, ensuring that your data pipeline starts with clean, well-organized input.

Data Transformation and Cleaning

Once data has been ingested, the next critical step in the data engineering pipeline is usually transformation and cleaning. This process involves converting raw data into a format that’s suitable for analysis, ensuring that the data is accurate, consistent, and free from errors. Python excels in this area, offering a range of powerful libraries designed specifically for data manipulation and transformation.

Pandas: The Workhorse of Data Manipulation

At the forefront is Pandas, the go-to library for data engineers working with tabular data. Pandas provides high-level data structures like DataFrames, which are incredibly versatile for data manipulation. Whether you’re merging datasets, handling missing values, or performing complex group operations, Pandas offers a wide array of functions to clean and transform data.

Dask: Scaling Dataframes for Big Data

For larger datasets that can’t fit into memory, Dask is a useful tool. Dask extends Python’s capabilities by enabling parallel computing, allowing you to scale your data processing across multiple cores or even a cluster of machines. This makes Dask an excellent choice for big data projects where performance and scalability are important.

Numerical Data Processing with NumPy

When it comes to numerical data, NumPy serves as the foundation for many of Python’s data processing libraries. It provides efficient, array-based computations, making it ideal for tasks that require fast math operations on large datasets.

Library/Framework | Description | Use Cases |

|---|---|---|

Pandas | The Swiss Army knife of data analysis, providing easy-to-use data structures and data analysis tools | Reading/writing data from various formats, data cleaning, manipulation, exploratory data analysis |

Dask | A flexible library for parallel computing in Python | Scaling Python code to large datasets, parallel data processing |

NumPy | The fundamental package for scientific computing with Python | Numerical operations, array manipulation, mathematical functions |

Together, these tools form a comprehensive toolkit for data transformation and cleaning, allowing data engineers to efficiently prepare data for analysis, no matter the size or complexity of the dataset.

Data Pipeline Automation

As data pipelines grow in complexity, manual management becomes increasingly impractical. This is where data pipeline automation tools come into play, streamlining the process of building, scheduling, and monitoring workflows. Python offers several powerful tools that allow data engineers to automate these tasks, ensuring that data flows smoothly from one stage of the pipeline to the next.

Luigi: Building Complex Pipelines

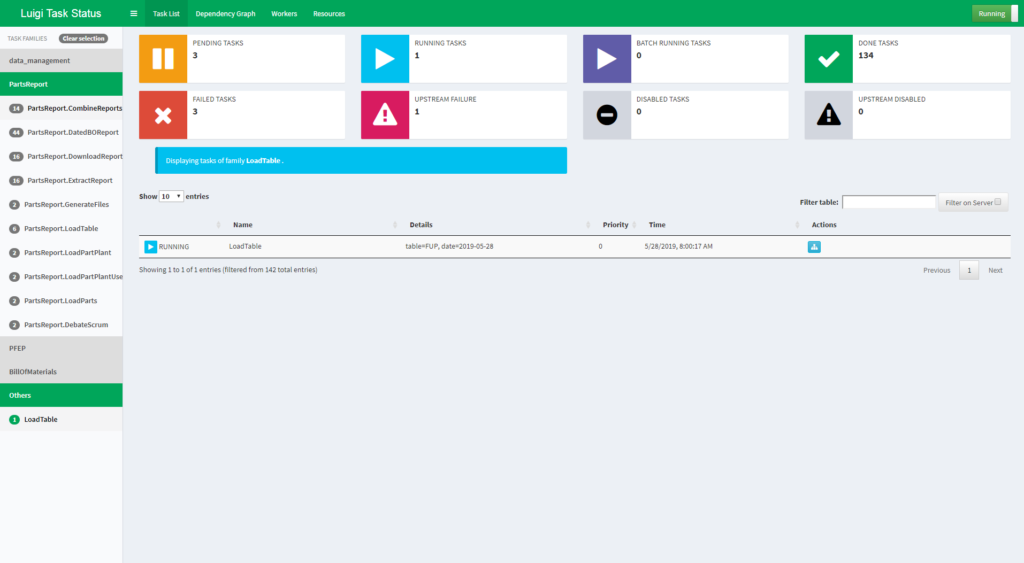

Luigi is a popular choice for building complex pipelines. Developed by Spotify, Luigi helps you manage dependencies between different tasks, ensuring that each task runs in the correct order. It’s particularly useful for constructing complex workflows where multiple tasks must be executed in sequence or in parallel. Luigi’s focus on task dependency management makes it an excellent tool for building and maintaining data pipelines that involve multiple steps and large datasets.

Example Luigi interface. Credit: luigi.readthedocs.io/

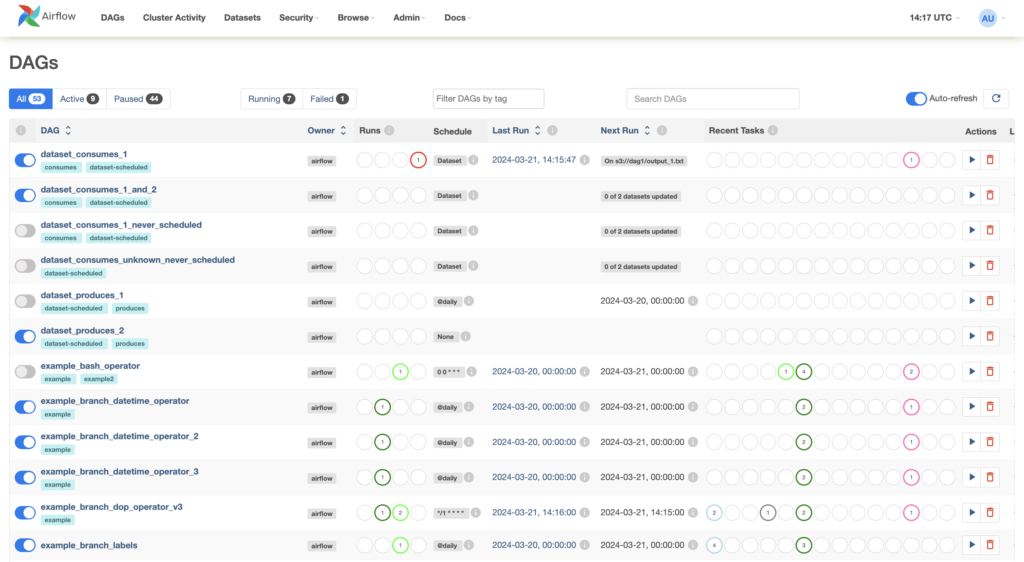

Airflow: Managing Workflow Automation

For more comprehensive workflow management, Apache Airflow stands out as a robust solution. Airflow allows you to programmatically author, schedule, and monitor workflows using Python code. Its Directed Acyclic Graph (DAG) structure lets you define tasks and their dependencies clearly, making it easier to manage complex data workflows. Airflow’s scalability and flexibility make it ideal for managing large-scale ETL (Extract, Transform, Load) processes, automating repetitive tasks, and integrating with various data sources.

Example of Airflow UI. Credit: airflow.apache.org

Both Luigi and Apache Airflow are essential tools in the data engineer’s toolkit, providing the automation capabilities needed to handle the increasingly complex and data-intensive workflows that modern data engineering demands.

Data Storage Solutions

Storing data efficiently is a critical aspect of data engineering, as the choice of storage solution directly impacts performance, scalability, and accessibility. Python provides powerful tools for interacting with both SQL and NoSQL databases, allowing data engineers to choose the right storage solution based on the type of data and the specific requirements of their projects.

Interacting with SQL Databases using SQLAlchemy

For structured data that fits neatly into tables and follows a predefined schema, SQL databases are often the preferred choice. SQLAlchemy is a versatile Python library that provides a comprehensive toolkit for working with SQL databases. It offers a high-level ORM (Object-Relational Mapping) layer that abstracts the complexities of database interactions, making it easier to query, insert, and update records. SQLAlchemy supports a wide range of relational databases, including PostgreSQL, MySQL, and SQLite, making it a versatile tool for data engineers working with SQL databases.

Working with NoSQL Databases like MongoDB

On the other hand, when dealing with unstructured or semi-structured data that requires flexible schemas, NoSQL databases like MongoDB come into play. MongoDB is a document-oriented database that stores data in JSON-like formats, making it ideal for handling large volumes of diverse data types. Python’s integration with MongoDB through libraries like pymongo allows data engineers to easily interact with NoSQL databases, enabling efficient data storage and retrieval in scenarios where traditional SQL databases might not be suitable.

Together, these tools allow data engineers to design and manage data storage solutions that are both flexible and scalable, ensuring that the right database technology is used for the right kind of data.

Data Serialization and Formats

Efficient data storage and transfer are essential components of any data engineering workflow. Data serialization formats play a crucial role in ensuring that data is stored and transferred in a compact, structured, and consistent manner. Python offers a range of libraries that make it easy to work with various serialization formats, catering to different use cases and data types.

Working with JSON and XML

For many applications, JSON (JavaScript Object Notation) is the go-to serialization format due to its lightweight and human-readable structure. Python’s json module makes it easy to encode and decode data in JSON format, making it ideal for API communication, configuration files, and data exchange between systems. XML (eXtensible Markup Language) is another widely used format, especially in enterprise applications where a more rigid, schema-based structure is required. Python’s xml libraries, such as ElementTree, allow data engineers to parse, modify, and generate XML data, providing robust support for handling XML-based workflows.

Efficient Data Storage with Parquet and Avro

When dealing with large datasets, especially in big data environments, more efficient serialization formats like Parquet and Avro are often preferred. Parquet is a columnar storage format that provides excellent compression and performance, making it ideal for analytical workloads. It’s particularly effective when working with large datasets that need to be stored and queried efficiently. Python’s integration with Parquet through libraries like pyarrow allows data engineers to read and write Parquet files with ease.

Avro is another high-performance serialization format that is widely used in data processing pipelines, particularly for streaming data. It is both a serialization format and a data exchange protocol, supporting schema evolution, which is critical in environments where data structures may change over time. Python’s fastavro library provides tools for working with Avro files, enabling data engineers to handle data serialization and deserialization efficiently.

By using these serialization formats, Python empowers data engineers to store, transfer, and process data in a way that maximizes efficiency and minimizes storage costs, ensuring that data pipelines remain performant and scalable.

Data Quality and Validation

Ensuring the quality of data is a fundamental aspect of data engineering, as poor data quality can lead to inaccurate analyses, flawed decisions, and ultimately costly mistakes. Python provides a range of tools that help data engineers maintain and validate data quality throughout the pipeline.

Ensuring Data Quality with Great Expectations

Great Expectations is one of the leading open-source tools for data validation and quality assurance. It allows data engineers to define “expectations” or rules that data must meet. These expectations can cover a wide range of data quality checks, such as verifying the presence of required fields, checking that data types are correct, or ensuring that values fall within expected ranges. Once these expectations are defined, Great Expectations automatically tests the data against them, identifying any inconsistencies or anomalies.

What sets Great Expectations apart is its ability to generate detailed, human-readable documentation that describes the data’s quality and the checks that have been performed. This not only helps in detecting and addressing data quality issues early in the pipeline but also provides a clear audit trail, which is essential for compliance and reporting purposes.

Proactive Data Validation with Pandera

Another powerful tool for maintaining data quality is Pandera. Pandera integrates seamlessly with Pandas DataFrames, enabling data engineers to enforce schema constraints directly within their data processing workflows. With Pandera, you can define schemas that specify the structure, data types, and constraints of your DataFrames. These schemas are then used to validate the data as it flows through the pipeline, catching errors such as unexpected data types, missing values, or out-of-range values before they propagate further.

Data Monitoring and Logging

Effective monitoring and logging are critical components of a well-maintained data pipeline. They ensure that data engineers can track the flow of data, detect issues in real-time, and maintain the reliability and performance of their data workflows. Python provides robust tools and libraries for implementing comprehensive monitoring and logging strategies.

Best Practices for Logging with Python’s Logging Module

Logging is essential for understanding what happens within your data pipelines, especially when things go wrong. Python’s built-in logging module offers a flexible and configurable way to record information about your application’s execution. By strategically placing logging statements throughout your code, you can capture critical information, such as the start and end of data processing tasks, the occurrence of errors, and the status of important operations.

When setting up logging, it’s important to define different log levels (such as DEBUG, INFO, WARNING, ERROR, and CRITICAL) to categorize the severity of events. This allows you to filter and prioritize logs based on their importance. Additionally, logging can be configured to output to various destinations, including the console, files, or external logging services, making it easier to centralize and analyze logs.

Monitoring with Prometheus and Grafana

While logging provides a historical record of events, real-time monitoring is essential for proactive management of data pipelines. Prometheus is a powerful open-source monitoring system that collects metrics from various sources and stores them in a time-series database. It is widely used for monitoring the performance and health of data pipelines, allowing data engineers to track key metrics such as processing times, resource usage, and error rates.

Paired with Grafana, a popular open-source visualization tool, Prometheus enables data engineers to create real-time dashboards that display critical metrics in an easily digestible format. Grafana’s customizable dashboards provide insights into the operational status of your data pipelines, making it easier to identify and address issues before they escalate.

An example dashboard. Credit: grafana.com

Together, Python’s logging module and the combination of Prometheus and Grafana offer a comprehensive solution for monitoring and logging in data engineering. By implementing these tools, data engineers can ensure that their pipelines are running smoothly, efficiently, and with minimal downtime.

Data Engineering in the Cloud

As data volumes grow and the need for scalability becomes more pressing, many organizations are turning to cloud platforms to handle their data engineering workloads. Cloud environments provide the flexibility, scalability, and tools needed to manage large datasets and complex pipelines efficiently. Python’s extensive ecosystem includes robust support for cloud services, making it easier for data engineers to use the power of the cloud.

Working with AWS, GCP, and Azure

Each of the major cloud platforms—Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure—offers a suite of services tailored to data engineering. Python’s SDKs for these platforms, such as boto3 for AWS, google-cloud for GCP, and azure-sdk for Azure, provide seamless integration with their respective services.

- AWS: With services like Amazon S3 for storage, AWS Glue for ETL, and Redshift for data warehousing, AWS is a popular choice for data engineering in the cloud. boto3, the AWS SDK for Python, allows you to interact programmatically with all AWS services, enabling you to build and automate complex data workflows that use the full power of AWS’s ecosystem.

- GCP: Google Cloud Platform offers powerful data engineering tools like BigQuery for data warehousing, Dataflow for stream and batch processing, and Cloud Storage for scalable object storage. The google-cloud library enables Python developers to easily interact with GCP services, making it straightforward to build and manage data pipelines in the cloud.

- Azure: Microsoft Azure provides comprehensive data engineering solutions, including Azure Data Lake for scalable storage, Azure Synapse Analytics for integrated analytics, and Azure Data Factory for orchestrating data workflows. The azure-sdk library gives Python developers access to these services, allowing them to construct, deploy, and manage data engineering workflows in the Azure cloud.

Scaling Data Engineering Workloads in the Cloud

One of the main advantages of using cloud platforms is the ability to scale resources dynamically based on the workload. Python’s cloud SDKs make it easy to take advantage of this scalability by allowing you to provision resources as needed, automate scaling operations, and integrate with cloud-native services for monitoring and optimization.

Whether you’re processing large datasets, running machine learning models, or orchestrating complex ETL pipelines, the cloud offers a robust, scalable environment for data engineering. Python’s seamless integration with cloud services ensures that data engineers can build flexible, scalable, and cost-effective solutions to meet the demands of modern data workloads.

Best Practices for Data Engineering with Python

To build robust and efficient data pipelines, following best practices in data engineering is crucial. These practices ensure that your workflows are not only reliable and scalable but also maintainable over time. Python, with its flexibility and extensive library ecosystem, provides ample opportunities to implement these best practices effectively.

1. Modular Code Design

One of the fundamental principles in data engineering is modularity. Breaking down your code into smaller, reusable components makes it easier to manage and scale your projects. By organizing your code into functions, classes, and modules, you can create clean, maintainable, and testable workflows. Python’s support for modular programming encourages this approach, allowing you to build complex pipelines that are easy to debug and extend.

2. Efficient Data Processing

Data engineering often involves processing large volumes of data, and efficiency is key to ensuring that your pipelines run smoothly. Utilizing Python libraries like Pandas and Dask can help you write efficient data processing code. However, it’s important to profile and optimize your code regularly to avoid bottlenecks. Tools like cProfile and line_profiler can assist in identifying slow sections of your code, enabling you to make necessary optimizations.

3. Use of Version Control

Version control is an essential practice for managing changes to your codebase, especially in collaborative environments. Git is the most commonly used version control system, and integrating it into your data engineering workflows helps track changes, collaborate with others, and roll back to previous versions if needed. Tools like GitHub or GitLab provide additional features such as pull requests and issue tracking, which enhance collaboration and code quality.

4. Automated Testing

Automated testing is a key practice that ensures the reliability of your data pipelines. Writing unit tests for your code and incorporating them into your continuous integration (CI) pipeline can help catch errors early in the development process. Python’s unittest and pytest frameworks provide powerful tools for writing and running tests, ensuring that your code behaves as expected and can handle edge cases.

5. Documentation and Code Comments

Well-documented code is easier to understand, maintain, and extend. Providing clear documentation and comments within your code helps others (and your future self) to quickly grasp the purpose and functionality of each component. Python’s docstrings and tools like Sphinx can be used to generate comprehensive documentation directly from your code, making it easier to maintain consistency across your project.

6. Continuous Learning and Adaptation

The field of data engineering is rapidly evolving, with new tools, technologies, and best practices emerging regularly. Engage with the Python community, attend conferences, participate in webinars, and explore new libraries and frameworks to keep your knowledge up-to-date and your workflows optimized.

7. Performance Optimization and Scaling

As your datasets grow and your data processing tasks become more complex, performance optimization and scaling become critical concerns. This is where specialized data engineering tools like Exaloop come into play. Exaloop is designed to turbocharge Python for data-intensive workloads, allowing you to achieve orders-of-magnitude improvements in execution speed.

Exaloop’s optimized libraries—which include NumPy, Pandas, and scikit-learn—are fully compiled, parallelizable and can even leverage GPUs. This means you can use the familiar Python syntax you know and love while enjoying the performance benefits of lower-level languages like C++.

Tool | Description | Use Cases |

|---|---|---|

Exaloop | A high-performance Python platform that accelerates data processing and machine learning workloads | Scaling Python code for large datasets, accelerating machine learning pipelines, reducing development and deployment costs, simplifying infrastructure management |

Furthermore, Exaloop provides a seamless interface to cloud computing resources, allowing you to scale your data engineering tasks effortlessly. It offers a convenient environment for developing, testing, and deploying your Python code. And with the built-in AI features, you can streamline your coding process with intelligent code suggestions and debugging support.

Incorporating Exaloop into your data engineering toolkit lets you break through typical performance bottlenecks, reduce development costs, and accelerate time to market. Try Exaloop today to accelerate your Python projects.

Conclusion: Building a Robust Data Engineering Toolkit

The Python data engineering landscape is in constant flux, with new tools and technologies emerging regularly. Staying ahead of the curve necessitates a growth mindset and a willingness to explore the latest advancements.

The data engineering tools discussed in this guide provide a solid foundation, but they are just a starting point. As you build your Python data engineering toolkit, prioritize tools tailored to your specific needs and adaptable to changing requirements. By combining core libraries, specialized tools, and innovative platforms like Exaloop, you can create a powerful and versatile data engineering stack that empowers you to overcome any challenge.

Try Exaloop to break through performance bottlenecks and accelerate your Python data engineering workflows.

FAQs

What are the must-have data engineering tools for Python beginners?

Focus on mastering the fundamentals first. Pandas, with its intuitive data structures and powerful manipulation capabilities, is an essential starting point for handling and analyzing data. Apache Airflow, while complex, provides a solid foundation for understanding workflow orchestration, which is crucial for automating and managing data pipelines. As you gain experience, you can explore more specialized data engineering tools like Dask or Modin for parallel processing and PySpark for distributed computing.

How can I determine the most suitable data engineering tools to address my team’s unique requirements and constraints?

Consider the size and complexity of your data because larger datasets might require tools like PySpark or Exaloop for efficient processing. Assess your team’s skillset as well—if Python expertise is strong, prioritize Python-centric tools, while diverse skills might call for tools with broader language support. Budgetary constraints also play a role, as some commercial tools offer advanced features but come with a cost.

Are there any specialized data engineering tools that can significantly improve the performance of my Python data pipelines?

Absolutely. Platforms like Exaloop are specifically designed to accelerate Python code execution by using multi-core processing and even GPU acceleration. These tools can drastically improve the speed and efficiency of your data pipelines, allowing you to process more data in less time and reduce overall infrastructure costs.